Artificial General Luck

Yet we may never even notice the waking.

Humanity looms large in our imagination. Zeus or HAL, we imagine otherness that’s not very other. C-3PO might be a pain in the ass, but you know people like that at your office[1]. Very few of us stare at clouds and see non-locally networked vortices in complex, information-increasing manifolds. Good or evil, we conceive of thinking things as being like us. They’ll have conversations with us. They’ll pass us in the halls and watch the sun rise in the morning. In essence, they’ll share our view of the world, if not our morality.

Nearly every depiction of artificial intelligence in mass media involves a scene where it talks to us[2]. And why not? Thinking itself feels like a conversation. Surely communication in some form is a prerequisite for being intelligent. As I will discuss in subsequent chapters, communication is certainly a predictor of positive life outcomes for humans, but what if it’s not required at all for intelligence? Or, if it’s too hard to imagine a human-level intelligence being fundamentally incapable of interpersonal communication, what if it’s not the ability that’s missing? What if it’s something even more basic?

Though it received surprisingly little press attention at the time, a letter published in 2015 in the Proceedings of the National Academy of Sciences presented rather compelling evidence that we are not the only intelligent beings on earth. The authors were not referring to the very standard gaze tracking or mirror test experiments used (sometimes controversially) to assess the cognitive and socioemotional capacity of dogs, chimps, and dolphins. Instead, they had analyzed information processing at a scale fundamentally different from our own in both spatial extent and temporal dynamics. Their argument, far from definitive, was that this being possessed a massive sensory system distributed over more than 100 acres, processing light, vibrations, temperature, moisture, and much more, and that its actions, measured in centuries’ worth of geological and ecological data, clearly revealed complex, latent cognitive states and predictive exploration of its own environment. Compressed to time and spatial scales analogous to our own daily experience, the authors argued, this creature is both undeniably intelligent on a level comparable to humans and social in its conspecific interactions. The authors weren’t talking about dolphins or ravens (or space Krakens); they studied the largest, oldest living thing on the planet: the 6,000-ton Quaking Aspen of Utah.

Although a Quaking Aspen grove looks similar to many other dense copses of trees, its uniqueness is under the ground. Every “individual” tree is a clone of an original, and they are all interconnected in a massively networked root system. The state of every tree and its many senses are communicated in multiple ways throughout this massive network. Just as with our own brains and the smart buildings of the Marketplace of Things, their network allows cognition to be distributed throughout the whole system, even when the individual neuron, tree, or smart device has no such capacity. The authors argue persuasively and with substantial data that these enormous clonal colonies of aspen are awake, behaving, intelligent beings. Yet perhaps the most shocking of their realizations is that we may never be able to communicate with them.

Cognition is embodied. Who we are is determined not simply by our cerebral cortex but by its interaction with its body. Our diverse bodies influence our sense of self in deep ways. In virtual reality (VR) experiments, a change as simple as being in a smaller virtual body causes people to act more passively and take fewer chances. Men embodied as women in VR show decreases in gender bias, and similar decreases are seen across racial groups. Emotions influence and are influenced by the state of our bodies. The relatively subtle differences in bodies across people produce meaningful differences in our experiences with the world[3]. Intelligence “just like ours” but expressed through a completely alien body might well be completely alien.

The Quaking Aspen has almost nothing in common with our embodied experience. Day passes into night. Summer into winter. Beyond the daily and seasonal cycles of light and dark, cold and warmth, however, most of us experience a world of images and sounds that the aspen have no means of perceiving. We rush through a world that changes minute by minute where the aspen’s world is nearly frozen by comparison. Yet while our experiences may seem rich in the moment, we only experience a tiny sliver of the world at any given time. The Quaking Aspen experiences a body spread over acres but has no hands or ears. It moves slowly over decades of growth but cannot walk. It senses subtle changes in light and temperature across that entire body. What would you see with an eye the size of a city?

There are no conversations for the Quaking Aspen. It broadcasts chemicals to the wind, which can travel for hundreds of miles before being “heard”. Its thoughts are the slow processes of its root system’s cellular transportation network, taking months where our neural impulses take a second. Strolling through its dappled grove we are mites moving at relativistic speeds[4], as immaterial to its plans for the future as the microbiome of our own bodies[5] is to ours. We cannot talk to the aspens or understand their motivations, and they seem to know nothing of us.

Now let’s be clear: the story above about a superintelligent grove of trees is complete and total bullshit.

What? Did you think you just missed the news that day when Wolf Blitzer said we are not alone in the universe? The Quaking Aspen is a very real thing, clones and all, but there is no reason to believe that it is intelligent, and certainly PNAS never published a paper to that effect[6]. In fact, I’m pretty sure that this is the plot to Avatar[7].

My point is, how would we truly know? The issues of embodied cognition and frames of reference are entirely real. An intelligence embodied in such a profoundly different form would exist entirely in parallel with our own, experiencing the same world but never touching.

Imagine now an intelligence spread across an entire city. It is embodied in cars and trains, smartphones, public cameras and mics, GPS, accelerometers, energy grids, and every other sensory, motor, or cognitive resource it can recruit. It experiences the movement of life and goods throughout itself and takes “pleasure” in the efficiency of those movements. Self-driving cars may be the poster children for AI, but entirely autonomous transportation networks are where things might get truly exciting[8]. There are still many problems to be solved before truly autonomous vehicles roam the earth like buffalo, but it’s clear that such vehicles don’t require human-like intelligence (even as specific components perform at superhuman levels). Embodying an entire transportation network, however, may well require something qualitatively more.

In this system there are no stop lights or stop signs any more than a healthy adult requires protective gear to keep from hitting themself. The entire distributed system is aware of its changing self as well as of the people within it[9]. When one car or street camera “sees” you, then they all do. If your phone transacts with it, your GPS and calendar information might be integrated as well, or it’s possible your phone makes its own inferences about your destination and routine and trades that information directly. The system senses pollution levels, weather conditions, traffic density (humans, goods, and vehicles), and innumerable other signals that might help with its purpose. And it’s not passive but is actively exploring new people, new routes, and new ideas. It’s creative and curious. Every moment of every day is an intractable multiplicity of Traveling Salesman Problems on expert level and a burning desire for elegant solutions[10].

It might even be more complex than that. Who’s to say a given region will have only one transportation superintelligence? Imagine the complex social interactions between competing-cooperating networks serving the same region, co-occupying the same space, even sharing many of the same cognitive and perceptual resources. The Lyft and Uber AIs would need to play nice while also outperforming one another. They would communicate with one another every moment of the day in millions of explicit and implicit ways. But would they ever talk to us?

A world with truly autonomous cars would also have LLM-driven speech bots capable of keeping their passengers socially engaged or collecting needed information. Talking to such a bot, however, doesn’t mean that one is talking to the Artificial General Intelligence (AGI[11]) embodied in the transportation grid. Much like the Quaking Aspen, it’s not at all obvious that the grid would have anything to say to us. Its purpose is served through the elegant efficiency of movement. A conversation with the most obsessive miniature train enthusiast would feel profoundly life-enriching by comparison. Our interactions with it might not seem remotely social at all. It might just seem like serendipity.

A car pulls up just as you walk out the door. You didn’t order it or do anything special at all. Cars are always just pulling up when you need them[12]. They always know where you are going and even your preferred way to get there. That’s just the way the world works. Luck, on a global scale. If it were only you, it might be a superpower. When it’s everyone it becomes a law of nature: Artificial General Luck (AGL). You don’t have conversations with gravity or the tides, you just ride the wave. Whether such a system is intelligent or not is irrelevant to daily life. And the grid may feel the same way about us…though it might evidence some nepotistic bias towards the scientists and researchers developing its infrastructure. It’s only human, after all.

Similar distributed intelligences could develop in many different domains. An AGL mine could run its own autonomous equipment, turning parts of itself on and off and negotiating contracts on commodity markets in real time to optimize returns against its own price predictions. The mine moves from one asteroid to the next[13], no humans necessary. The natural resources it produces simply show up when and where they are needed. AGL buildings, politics, markets, and more would be the ultimate expression of an intelligent world. Like a benevolent god moving the spheres with the subtlest touch[14].

Luck as a cultural norm, a tangible expression of technology, would transform our lives in some profound ways, even if there’s no conversation to have with that technology. If luck becomes a fundamental design principle for AI, it might seem our inevitable destination is amiable, ineffectual Eloi-hood. This is a world that knows us better than we know ourselves and gives away our heart’s desire for free[15].

It doesn’t have to be that way. My work repeatedly returns to a profoundly different design ethic: technology must always challenge us. AGI needn’t substitute for us. All of that amazing serendipity should serve only to make us better, a string of astonishing experiences engineered to lift us up.

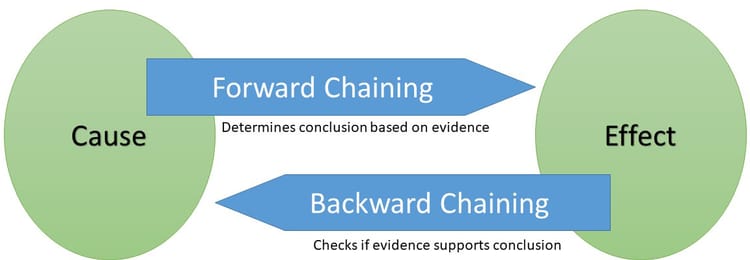

Artificial general luck is only one example of how hard AGI might be dramatically different from us. Our very existence is proof that hard AGI is entirely achievable. Yet simply building bigger deep neural networks with more data does not mean they will someday wake up and prefer Fox News over MSNBC (of course, preferring trashy news might violate the definition of rational intelligence anyway[16]). Mapping enormous numbers of correlations does not make a system intelligent. If we ever arrive at AGI, it will likely require entirely new forms of algorithms.

There’s also a genuine question about whether we have the natural resources necessary to build AGI. Running a single, large-scale model requires more energy than two mid-size cars use in a year. In a classic far-future planning scenario, establishing a civilization off Earth will require a substantial natural resource commitment, and it is very possible that we could use up all of the easily available natural resources and leave ourselves stranded here: still able to run our cities and our cars, but trapped on a planet that we no longer have the energy to escape. AGI might have a similar quality, in that we may be able to conceptualize what is required to build it yet run out of the resources required to bring it to life[17].

If, given all of this, you are still truly worried about AGI taking over the world, then understand that there is more than one kind of singularity. We could simply make ourselves smarter.

[1] And they’re usually the glue that keeps all the shuddering parts bound to the whole.

[2] The Borg were one of the great villains in the history of science fiction. From their first episode in Star Trek TNG, they captured the spirit of an intelligence that, if not wholly alien, was still painfully dissonant to our own. HAL expressly pleaded its case for not opening the pod bay doors. Agent Smith desperately wanted Morpheus to understand that humans were a virus. But even the nominally communicative “resistance is futile” simply enhanced the Borg’s seeming lack of our basic presumption of a theory of mind. But then you fuckers had to go and ruin it all with a gloating, S&M Borg Queen. Lazy. Fucking. Writing. (And I know lazy!)

[3] I have meant for years to write a story about a single “mind” expressed through many different bodies at once: one person with many embodied personalities.

[4] Even the truck-stop egg-salad sandwich space worms from Futurama operated on the same timescales as Fry and the others. If they raced through the body at dream-within-a-dream-within-a-dream speeds (or millimeters a day) they’d just be a natural process rather than protagonists.

[5] I wonder if superintelligent groves recommend the equivalent of fecal transplants to replace the “bad humans” with the “good”?

[6] Though with the rise of scammy for-profit journals, I bet I could get this paper published: A cross-kingdom comparative analysis of multidimensional intelligence: are the editors of this journal dumber than a tree?

[7] Now, had I tried to work “unobtanium” into my little story, no one would have believed it. That is one of the laziest names in all of science fiction. Every time Giovanni Ribisi said it, I wanted a personal apology from James Cameron. How about just naming the main characters “Sergeant Hero” and “Loveinterestia”?

[8] City-scale computing is already on its way. In one intriguing project, “cloud-based ‘smart transceivers’ stream weight data to edge devices, enabling ultra-efficient photonic inference” for a “Boston-area field trial over 86 kilometers of deployed optical fiber, wavelength multiplexed over 3 terahertz of optical bandwidth”. That’s so many words!

[9] Think of it as urban proprioception.

[10] “Turn them all into paperclips!” is a terrible solution. Where’s the fun in Starcraft with no Zerg? No puzzles to solve in Myst? No mysteries to explore in the Great Underground Empire…empire…empire…?

[11] For some reason, many very clever people thought that the ability to solve many different problems (general intelligence) would require a sentient, reasoning being. Then the first large language models (LLMs) came out and the ugly old phrase “idiot savant” gained new life.

[12] Or, perhaps before you need them. If cars are showing up when you are walking out the door, that car might have been heading in your direction before you even thought about leaving the house. It might have nothing to say to you even as it knows you better than you know yourself.

[13] You thought we were still on Earth? How boring.

[14] “When you do things right, people won’t be sure you’ve done anything at all.”

[15] Actually, in this case “our nucleus accumbens’ desire” seems a better description, capturing that quick-loop, itch-scratching type of reward.

[16] See “The Political Legacy of Entertainment TV” for the whole, self-destructive story.

[17] Of course, any true AGI worth the title should be able to easily travel back in time and cause the very events that bring it into existence, timey-wimey style.