Aliens, Bees, AIs, or Intestinal Protozoa: who wins?

This week we're getting social. Well, not me so much, but the research is. Plus…

You want to support my work but don't need a keynote from a mad scientist? Please become a paid subscriber to this newsletter and recommend it to friends!

Research Roundup

This week is all about social learning. It’s the foundation of education, innovation, and the formation…of relationships. Read on to learn about social learning in students, bees, and protozoa-borgs.

Death to Slides

Death to slides! I’ve always hated their use as a crutch, and now we have the research to back it up. Researchers tracked the eyes and brain activity of learners watching video lectures with a human instructor, an animated instructor, or no instructor onscreen. Having an instructor present produced more synchronized eye movements and brain activity (yay! More interbrain synchrony) and, most importantly, “superior post-test performance”.

The Social Life of Bees

Social learning even plays out in bumblebees! Bees trained to solve a puzzle box via explicit rewards were able to teach other bees using only implicit social rewards. Social learning induces dopamine rewards that can replace sugar rewards and drive bubble-scale innovation.

Microbiome Recapitulates Macroculture

Are our bodies just a bunch of terrestrial spaceships being piloted by amorous protozoa? Tracking the spread of specific microbiome strains between people reveals that kids inherit around half their gut microbes from their moms. But the rest tracks rather directly with cohabitation time. In fact, true social networks were better inferred from shared oral microbial strains than from demographic information about people. Holy crap…btw, also a good measure.

Movie Night

When people watch social scenes in movies, their memory for the details is better than for matched nonsocial scenes. This advantage is driven by activity in the dorsomedial prefrontal cortex (dmPFC, a node in the default mode network), which suggests that differences in social learning (for good and bad) might be predicted by measuring dmPFC structure in learners.

Weekly Indulgence

I've had a few happy brags this week.

First, I appear to be an endless curiosity to the Germans. The latest is this lovely little article on me in Handelsblatt (yes, it has pictures of the old me, but I can roll with that). Since I don't speak German, it is largely just a depressing collage of me aging. Visibility can be painful :)

Second is my picture on this crazy list of heavy hitters:

Wow! How's this for a lineup? #Brynjolfsson #Harari #Cowen #whoeverthehellthatlastladyis

Come see all of us at the "Future of Talent Summit" in Stockholm, June 18-19.

(Bonus: 4-way rap battle at the closing dinner to decide the future of AI.)

Third & final, “The Disrupted Workforce” interviewed me! We talk through my research on how AI can be leveraged as productive friction for improving teamwork, communities, and our critical thinking and creative capacity.

Ultimately, we took on the question: Can AI make humanity better?

🎧Tune in: https://link.chtbl.com/the-disrupted-workforce

Stage & Screen

- Apr 16, LA: Come see How to Robot-Proof Your Kids with the LA School Alliance.

- May 8, Boston: chatting with BCG on lifting Collective Intelligence and The Neuroscience of Trust.

- May 1, London: I'm chairing a dinner against homelessness in the UK for Crisis: "Venture Philanthropy: The Benefits, How It Works, & How To Get Involved" (The Conduit).

  - There is an external registration link here.

- May 3, Porto: I return to Portugal to share new research on Innovating Innovation at SIM.

- May 8-10, Santa Clara: I return to Singularity University.

- May 15-16, Mexico City: I'm giving a keynote for El Aleph, Arts & Science Festival at Universidad Nacional Autónoma de México. ¡Qué bueno!

- May 23, Seoul: keynoting the Asian Leadership Conference! (from last year)

- Mid-June, UK & EU: Buy tickets for the Future of Talent Summit and so much more!

- July, DC: Keynote at Jobs For the Future Horizons!

  - Early Bird registration is open.

Does your company, university, or conference just happen to be in one of the above locations? Do you want the "best keynote I've ever heard" (shockingly, said by multiple audiences last year)?

<<Please support my work: book me for a keynote or briefing!>>

SciFi, Fantasy, & Me

The 3 Body Problem...hmmmm. I very much wanted to enjoy this show (and it's not bad), but all of the mind-expanding ideas introduced in the book(s) are just decoration here. Using the sun as an amplifier, the idea of a protonic AI, and even the titular 3-body problem: they are all present in the show, but without the wonder of the book. Perhaps it's just not possible to engage with The Red Wedding or You Are Bugs if you already know they are coming.

I will say, however, that 3 Body Problem feels like an impoverished green screen cousin compared to Game of Thrones, and while the performances are solid, the weakest, least believable character gets most of the screen time. Watch it for fun, but stick with the book for the big ideas.

Robot-Proof Excerpt: "X-Risk"

The world of neurotech entrepreneurs is…weird. We’re engaged in an enterprise that, in science fiction at least, is almost entirely the domain of hubris-driven antagonists, and yet has almost no existing market or committed venture capital. And so we get together occasionally for dinner and conversation to talk ethics, neuroscience, and life choices.

I recently attended one of these dinners, hosted by the neurotech society Brain Mind. They brought together an amazing room full of the fanciest of schmancy neurologists, neuroscientists and neurotech entrepreneurs, all pushing the limits of our understanding of ourselves.

In order to spark some conversation, the hosts asked one young man to offer some provocative remarks. Rather than taking 60 seconds to be charming and thought-provoking, he took 20 minutes to insult everyone in the room, seemingly without even realizing it. Rambling self-congratulations aside, his core claims can be summarized as follows:

- Claim 1: no one is working on AI existential threat (which he called X-threat because…it's fuckin' cool).

- Claim 2: neuroscientists are all cowardly and uncreative.

- Claim 3: “If you're not smart enough to see the problem of AGI X-threat…”

By claim 3 I’d had too much. There are so many broken parts to this person’s thinking (starting with the fact that all of his thinking seems to be inherited from other people—hardly a creative, courageous identity). When I called him out, he said he was just being provocative. I pointed out that “Provocative is ‘famine is terrible and so let’s all eat babies.’ Insulting is ‘no one is working on famine because everyone in your profession is cowardly and uncreative and stupid.’”

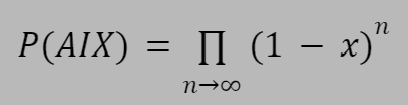

Inelegant showmanship aside, I want to discuss claim 3 and the core idea of existential AI threat. What exactly is this complex, genius-demanding equation others are “not smart enough” to understand:

$$P(AIX) = (1 - x)^n$$

where x is the probability of Skynet on any given day, n is the number of days, and P(AIX) is the probability of our survival. No matter how small one assumes x to be, if it’s greater than zero, P(AIX) converges to 0 as n grows, and we all die. Any of you readers with a little comfort reading equations can see that this is a simple exponential, neither a groundbreaking mathematical insight nor a model of any kind of natural phenomenon. From macroeconomics to ecological processes to neural computation, you play with toy models like these to begin exploring an idea, not to seriously discuss real-world phenomena or global policy. Real natural processes have complex dynamics and feedback loops. Exponentials aren’t just lazy; in the long run, they are nonexistent. Yet somehow this is the great and inevitable insight of the AI existential risk crowd [2].
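To make the arithmetic concrete, here is a minimal Python sketch of the toy model, reading it as P(AIX) = (1 - x)^n: the chance of surviving n independent days, each carrying the same risk x. That reading is an assumption based on the definitions above, and the function names and the one-in-a-million daily figure are hypothetical, chosen only for illustration.

```python
import math


def survival_probability(x: float, n: int) -> float:
    """Toy model: P(AIX) = (1 - x)**n.

    x: assumed constant, independent daily probability of catastrophe
    n: number of days
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be a probability in [0, 1]")
    if x == 1.0:
        # Certain doom on day one (or certain survival if no days pass).
        return 0.0 if n > 0 else 1.0
    # exp(n * log(1 - x)) equals (1 - x)**n, computed stably for tiny x.
    return math.exp(n * math.log1p(-x))


def days_until_coin_flip(x: float) -> float:
    """Days until survival odds fall to 50% under the same toy model."""
    if not 0.0 < x < 1.0:
        raise ValueError("x must be strictly between 0 and 1")
    return math.log(0.5) / math.log1p(-x)


if __name__ == "__main__":
    x = 1e-6  # hypothetical one-in-a-million daily chance
    for years in (1, 100, 10_000, 1_000_000):
        p = survival_probability(x, 365 * years)
        print(f"{years:>9,} years: P(AIX) = {p:.4f}")
    print(f"Coin-flip odds after ~{days_until_coin_flip(x) / 365:,.0f} years")
```

Because x is a fixed constant, nothing in the model ever responds to anything: the curve can only decay, and shrinking x merely stretches the time scale. The feedback loops that real processes have are simply absent.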

[1] Also not in science fiction.

[2] This is also the insight of the Fermi Paradox, with x representing the chance of intelligent life on a planet and n the number of planets. Aliens and AGIs may be out there, but they sure as shit aren’t predicted by this sort of lazy thinking. In fact, the collision of these two napkin backs means alien super AGIs will destroy us no matter what we do.
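The Fermi version in footnote [2] can be read as the complement of the same toy model: if each of n planets independently has chance x of hosting intelligent life, the chance that at least one does is 1 - (1 - x)^n, which heads toward 1 as n grows. A minimal Python sketch under that reading (an interpretation, not a formula stated in the text; the per-planet odds and planet counts below are hypothetical):

```python
import math


def prob_at_least_one(x: float, n: int) -> float:
    """Chance that at least one of n independent trials, each with probability x, succeeds.

    Computes 1 - (1 - x)**n; log1p/expm1 keep it accurate when x is tiny.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be a probability in [0, 1]")
    if x == 1.0:
        return 1.0 if n > 0 else 0.0
    return -math.expm1(n * math.log1p(-x))


if __name__ == "__main__":
    x = 1e-12  # hypothetical per-planet odds of intelligent life
    for n in (10**9, 10**11, 10**13):  # hypothetical planet counts
        print(f"n = {n:.0e}: P(at least one) = {prob_at_least_one(x, n):.5f}")
```

The arithmetic is identical to the survival model, just flipped: whatever fixed x you assume, enough trials push the answer toward certainty, which says more about the model than about aliens.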

Read the rest when Robot-Proof hits the shelves!

| Follow more of my work at | |
|---|---|
| Socos Labs | The Human Trust |
| Dionysus Health | Optoceutics |
| RFK Human Rights | GenderCool |
| Crisis Venture Studios | Inclusion Impact Index |
| Neurotech Collider Hub at UC Berkeley | |